[TIL] Deploying an AI Model Served by a Flask API on AWS EC2 and Connecting It to a Nest.js Project

06/20/23

Using an AI Model for Emergency-Level Prediction: Scaling Up the EC2 Instance

t2.micro (free tier)

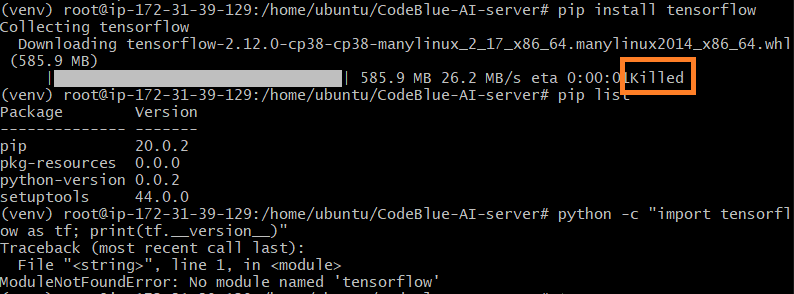

🚫 `Killed`: the process was terminated by the operating system.

`pip install tensorflow` was killed by the OS because the free-tier instance, which has only 1 GiB of RAM, ran out of memory during the installation.

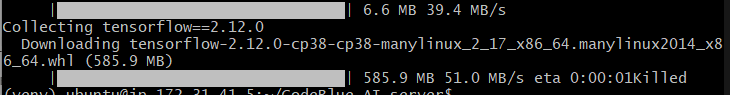
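Before retrying on a bigger instance, it can help to check how much memory is actually available before running `pip install tensorflow`. A minimal sketch, assuming a Linux instance (it reads `/proc/meminfo`); the `REQUIRED_MIB` threshold of 2048 MiB is an arbitrary assumption for illustration, not a documented TensorFlow requirement:

```python
from pathlib import Path


def mem_available_mib(meminfo_text: str) -> int:
    """Return MemAvailable from the contents of /proc/meminfo, in MiB."""
    for line in meminfo_text.splitlines():
        # Lines look like "MemAvailable:    3891456 kB" (the unit is KiB).
        if line.startswith("MemAvailable:"):
            return int(line.split()[1]) // 1024
    raise ValueError("MemAvailable not found")


def enough_memory(meminfo_text: str, required_mib: int) -> bool:
    """True if at least `required_mib` MiB are currently available."""
    return mem_available_mib(meminfo_text) >= required_mib


# Assumed threshold for illustration only; not a documented TensorFlow requirement.
REQUIRED_MIB = 2048

if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")  # exists on Linux only
    if meminfo.exists():
        text = meminfo.read_text()
        print(f"MemAvailable: {mem_available_mib(text)} MiB")
        if not enough_memory(text, REQUIRED_MIB):
            print("pip install tensorflow may be killed for lack of memory")
```

The parsing is kept separate from reading the file so it can be checked against sample text without a real instance.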

t3.small

🚫 As with the t2.micro, the installation was killed; the t3.small has only 2 GiB of RAM.

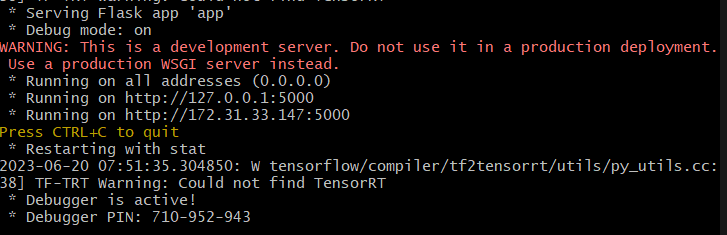

t3.medium

✅

`pip install tensorflow` completed successfully on the t3.medium (4 GiB of RAM). The Flask API only loads a pre-trained model to predict emergency levels and does no training, so it does not need a GPU; a CPU-only EC2 instance is sufficient.
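Because the API only runs inference, it can load the model once and reuse it for every request. A minimal sketch of that pattern with hypothetical names (`get_model`, `predict`); the stub stands in for the real TensorFlow loading call, which is an assumption here (for example `tf.keras.models.load_model`). Setting `CUDA_VISIBLE_DEVICES` to `-1` is a common way to make TensorFlow ignore GPUs and is harmless on a CPU-only instance:

```python
import os
from functools import lru_cache

# Unless already set, make TensorFlow ignore any GPU so inference runs on the
# CPU. This must happen before TensorFlow is imported; on a CPU-only instance
# it changes nothing.
os.environ.setdefault("CUDA_VISIBLE_DEVICES", "-1")


@lru_cache(maxsize=1)
def get_model():
    """Load the pre-trained model on first use, then return the cached object.

    Hypothetical stub: the real app would call something like
    tensorflow.keras.models.load_model(<model path>) here.
    """
    def stub_model(text: str) -> dict:
        return {"text": text, "emergency_level": None}  # placeholder output
    return stub_model


def predict(text: str) -> dict:
    """Hypothetical body of a Flask route: inference only, no training."""
    return get_model()(text)
```

With `lru_cache(maxsize=1)`, the first call pays the loading cost and every later request reuses the same object, which keeps per-request work down to a forward pass.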

Additional commands executed on the EC2 instance / Installed modules:

- `sudo apt update`
- `sudo apt install python3-pip`
- `sudo apt-get install python3-venv`
- `sudo apt-get install default-jdk` ⇒ installs the JDK needed to use `konlpy`
- `sudo pip install konlpy`
- `pip install intel-tensorflow==2.12.0` (installed to resolve version-related errors)
- `pip install -U keras_applications==1.0.6 --no-deps`
- `pip install -U keras_preprocessing==1.0.5 --no-deps`
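Since several packages were pinned to specific versions to work around errors, a short script can confirm that the environment matches those pins before the server is started. A minimal sketch using only the standard library; `PINNED` simply restates the versions from the commands above, and checking for `java` on `PATH` is only a rough proxy for konlpy's JDK requirement:

```python
import shutil
from importlib import metadata

# Restates the versions pinned by the pip commands above.
PINNED = {
    "intel-tensorflow": "2.12.0",
    "keras_applications": "1.0.6",
    "keras_preprocessing": "1.0.5",
}


def check_pins(pins, get_version=metadata.version):
    """Return {package: problem} for each pin that is missing or mismatched."""
    problems = {}
    for name, expected in pins.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if found != expected:
            problems[name] = f"expected {expected}, found {found}"
    return problems


def java_available(which=shutil.which) -> bool:
    """Rough proxy for konlpy's JDK requirement: is `java` on PATH?"""
    return which("java") is not None


if __name__ == "__main__":
    for pkg, problem in check_pins(PINNED).items():
        print(f"{pkg}: {problem}")
    print("java on PATH:", java_available())
```

An empty report from `check_pins` means every pinned package is installed at the expected version.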

Connecting to the EC2 Server Instance

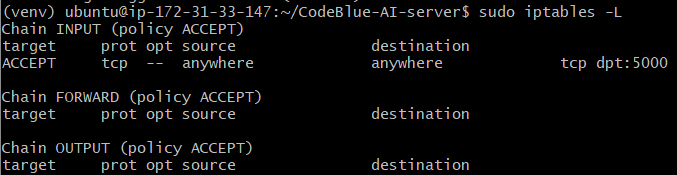

Port forwarding

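`sudo iptables -t nat -A PREROUTING -i eth0 -p tcp --dport 80 -j REDIRECT --to-port 5000` ⇒ redirects incoming TCP traffic on port 80 (HTTP) to port 5000, where the Flask server listens. `eth0` must match the instance's network interface name.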

My mistake: I kept connecting to the t3.small's IP address instead of the t3.medium's. It was a simple slip, not a configuration problem.
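A mix-up like this shows up quickly if you probe the instance directly on both port 80 (through the redirect) and port 5000 (Flask itself). A minimal sketch with the standard library; it assumes the EC2 security group allows inbound traffic on the probed ports, and the script name in the usage comment is hypothetical:

```python
import sys
import urllib.error
import urllib.request


def probe(url: str, timeout: float = 3.0):
    """Return the HTTP status code served at `url`, or None if nothing answered."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, just not with a 2xx status (e.g. 404 for "/").
        return err.code
    except OSError:
        # URLError (connection refused, DNS failure) and timeouts are OSErrors.
        return None


if __name__ == "__main__":
    # Usage: python probe.py <public-ip-of-the-instance>
    if len(sys.argv) > 1:
        host = sys.argv[1]
        for port in (80, 5000):
            print(f"{host}:{port} ->", probe(f"http://{host}:{port}/"))
```

Any status code, even 404, means a server answered on that host and port; `None` on both ports suggests the wrong IP, a closed security group, or a stopped server.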

Running the Flask server in the background

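- `nohup python -u app.py &` ⇒ run in the background (`-u` disables output buffering, so logs reach `nohup.out` immediately)
- `tail -f nohup.out` ⇒ follow the logs
- `lsof -i :5000` ⇒ find the PID of the process using port 5000
- `sudo kill -9 [PID]` ⇒ terminate the running process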
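Looking up the PID with `lsof` every time works, but `kill -9` (SIGKILL) gives the server no chance to shut down cleanly. A minimal sketch of an alternative, assuming it runs from the directory containing `app.py`: record the PID at launch (`app.pid` is a hypothetical file name), then stop with SIGTERM and escalate to SIGKILL only if the process does not exit. Instead of `nohup`, it starts the server in a new session (`start_new_session=True`) so it survives the SSH session ending:

```python
import os
import signal
import subprocess
import sys
import time
from pathlib import Path


def start_background(cmd, log_path="nohup.out", pid_path="app.pid"):
    """Start `cmd` in its own session, appending stdout/stderr to log_path."""
    with open(log_path, "ab") as log:
        proc = subprocess.Popen(
            cmd,
            stdout=log,
            stderr=subprocess.STDOUT,
            stdin=subprocess.DEVNULL,
            # New session = no controlling terminal, so closing the SSH
            # session does not deliver SIGHUP (nohup instead ignores SIGHUP).
            start_new_session=True,
        )
    # The child keeps its own copy of the log file descriptor.
    Path(pid_path).write_text(str(proc.pid))
    return proc


def stop(pid_path="app.pid", grace=5.0):
    """Send SIGTERM; escalate to SIGKILL only if still alive after `grace` s."""
    pid = int(Path(pid_path).read_text())
    try:
        os.kill(pid, signal.SIGTERM)
    except ProcessLookupError:
        pass  # already gone
    else:
        deadline = time.monotonic() + grace
        while time.monotonic() < deadline:
            try:
                os.kill(pid, 0)  # signal 0 only checks that the PID exists
            except ProcessLookupError:
                break
            time.sleep(0.1)
        else:
            try:
                os.kill(pid, signal.SIGKILL)
            except ProcessLookupError:
                pass  # exited just before the deadline
    Path(pid_path).unlink(missing_ok=True)


if __name__ == "__main__":
    # Usage: python run_bg.py start | python run_bg.py stop
    if sys.argv[1:] == ["start"]:
        start_background([sys.executable, "-u", "app.py"])
    elif sys.argv[1:] == ["stop"]:
        stop()
```

Saved as, say, `run_bg.py` (a hypothetical name) next to `app.py`, it would be used as `python run_bg.py start` and `python run_bg.py stop`; `tail -f nohup.out` still works for following the logs.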