📌 What I did today

Why BERT is needed as the learning model

BERT is a pretrained language model: instead of training from scratch, we take the existing model and fine-tune it to fit our needs. Fine-tuning is a technique where a pretrained model's weights are updated further on data for a downstream task. Models fine-tuned on domain-specific data tend to perform better in that domain.

Selecting a BERT model from Hugging Face Transformers

- bert-base-multilingual-cased: A multilingual model covering over 100 languages, but it doesn't seem to have been trained extensively on Korean. The 119,546 vocabulary entries I counted appear to be shared across all of those languages rather than being Korean-only, so only a fraction of them actually cover Korean.

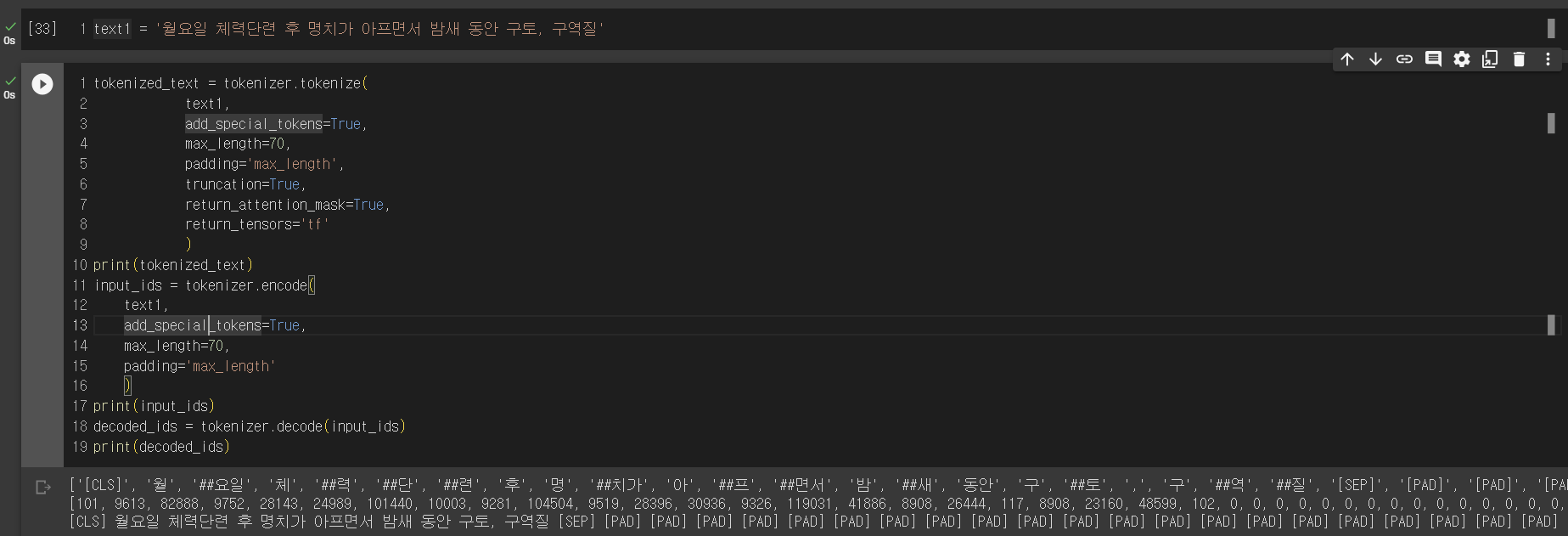

When using the raw tokenizer of BERT:
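To get a feel for why thin Korean vocabulary coverage hurts, here is a minimal sketch of greedy longest-match WordPiece splitting, the scheme BERT's tokenizer uses to break a word into sub-word pieces. The function name `wordpiece_tokenize` and both vocabularies are toy assumptions for illustration only. They are not the real bert-base-multilingual-cased vocabulary, and the real tokenizer also splits on whitespace and punctuation first.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first split of a single word into WordPiece tokens."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink from the right.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a "##" prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # Nothing in the vocabulary matches here: the whole word becomes unknown.
            return [unk_token]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabularies (NOT taken from any real model).
sparse_vocab = {"자", "##연", "##어", "##처", "##리"}  # only single-character pieces
rich_vocab = {"자연어", "##처리"}                      # whole sub-words are present

fragmented = wordpiece_tokenize("자연어처리", sparse_vocab)
compact = wordpiece_tokenize("자연어처리", rich_vocab)
unknown = wordpiece_tokenize("챗봇", sparse_vocab)

print(fragmented)  # one piece per character
print(compact)     # two meaningful pieces
print(unknown)     # falls back to the unknown token
```

With the sparse vocabulary the word breaks into single characters or collapses to `[UNK]`, which is roughly the kind of behaviour that made me look for a Korean-specific model.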

- KoBERT: A model that overcomes the limitations of Google's bert-base-multilingual-cased in Korean language performance.

- Reference GitHub repositories: https://github.com/SKTBrain/KoBERT/tree/master/kobert_hf and https://github.com/monologg/KoBERT-Transformers

=> Selected KoBERT; currently implementing it.

Training an AI model is really difficult... Feedback received during mentoring: accuracy >= 90%!!! The model needs at least 90% accuracy for free-text input to be meaningfully usable.
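To keep that target in front of me, the check can be sketched as a small helper. This is only an assumed way of measuring it: the names `accuracy`, `meets_target`, and `TARGET_ACCURACY` are hypothetical, and the predictions and labels below are made-up placeholders, not results from my model.

```python
TARGET_ACCURACY = 0.90  # threshold from the mentoring feedback


def accuracy(predictions, labels):
    """Fraction of predictions that exactly match their labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty evaluation set")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def meets_target(predictions, labels, target=TARGET_ACCURACY):
    """True when accuracy on the evaluation set reaches the target."""
    return accuracy(predictions, labels) >= target


# Placeholder data for illustration only (not real model output).
example_labels = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]
example_predictions = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1]  # last one is wrong

example_accuracy = accuracy(example_predictions, example_labels)
print(example_accuracy, meets_target(example_predictions, example_labels))
```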